LLMs

Learning what LLMs can do will help you see how your product can leverage them. By the end of this lesson, you should understand the different ways you can customize LLMs to fit your needs.

LLM Functionalities

To understand what AI can and can’t do, and to turn your AI product into reality, it helps to know the custom AI options that are currently available. The following is a brief summary of what LLMs can be used for and how.

Knowing what AI is and isn’t good at will help you save time and money!

LLM Customization and How it Works

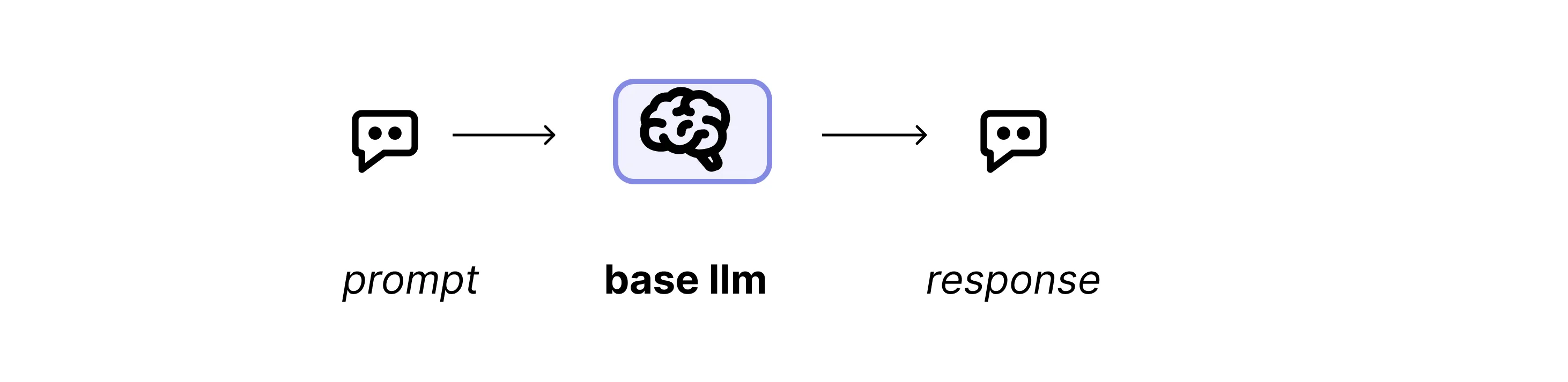

Prompting.

This is the most basic functionality of LLMs. You give them a prompt, and the model generates a response. This method uses the pre-trained models without any additional customization. The best LLMs are already quite powerful, so many applications can be built using just prompting!

Example applications:

- Smart Recipe Generator: Generate a recipe based on what the user has in their fridge.

- Learning Assistant: Pick a topic to learn about, and the model will show you a list of resources (YouTube videos, Reddit threads, etc.) on it. It will also summarize the content and direct you to the most relevant resources.

- Home Decor and Interior Design Assistant: Show the AI your room, and give it some style and budget preferences, and the model will generate a list of items to buy to decorate the room and furniture placement recommendations.

- Calorie Counting App: Take a picture of your food, and the model will estimate the calorie count. It can log your food intake and report your calorie deficit or surplus.
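To make prompting concrete, here is a minimal sketch based on the Smart Recipe Generator idea above. The function name `build_recipe_prompt` and the prompt wording are illustrative assumptions, not a recommended template. No model is called here: the resulting string is what you would send, unchanged, to whichever pre-trained model you choose.

```python
def build_recipe_prompt(ingredients, dietary_notes=""):
    """Assemble a plain-text prompt for a recipe-generating LLM.

    Hypothetical helper: the wording below is one possible prompt,
    not an official or recommended template.
    """
    if not ingredients:
        raise ValueError("at least one ingredient is required")
    lines = [
        "You are a helpful cooking assistant.",
        "Suggest one recipe that uses only the ingredients listed below.",
        "Ingredients: " + ", ".join(ingredients),
    ]
    if dietary_notes:
        lines.append("Dietary notes: " + dietary_notes)
    lines.append("Reply with a title, a short ingredient list, and numbered steps.")
    return "\n".join(lines)


prompt = build_recipe_prompt(["eggs", "spinach", "feta"], dietary_notes="vegetarian")
print(prompt)
```

All of the customization here lives in the text of the prompt; the model itself is untouched, which is why prompting is the cheapest option to try first.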

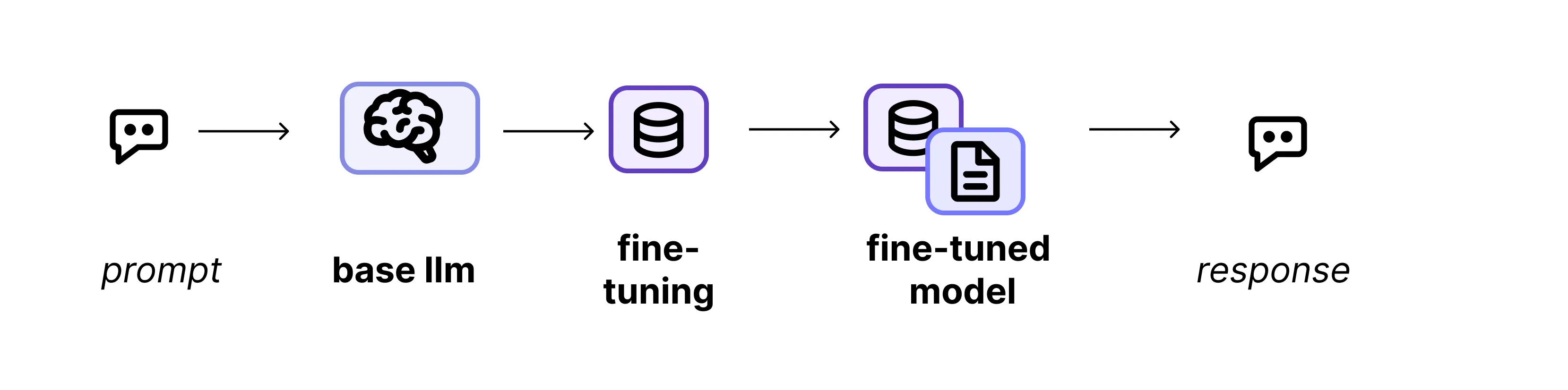

Fine-tuning.

Chatbots are quite general since they answer questions and prompts for very broad tasks and formulate their answers based on all the data they were trained on. Fine-tuning customizes an LLM to perform specific tasks or respond in a particular style by training it on a small, domain-specific dataset. This is useful for creating specialized applications that require knowledge or tone not covered by the general training data. You can think of fine-tuning as a way to teach the LLM to do a specific task in a particular way.

Example Applications:

- Customer Service Chatbot: Fine-tune to respond in a company’s brand voice or to handle specific customer scenarios.

- Educational Tutor: Fine-tune on curriculum content to provide relevant explanations and solutions to specific course material in a specific style (supportive, concise, doesn’t reveal answers right away, etc.).
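Fine-tuning starts with a small dataset of examples written in the style you want the model to learn. The sketch below prepares such a dataset for the customer service example. The `messages`/`role`/`content` layout is a common chat-style convention, but the exact schema depends on the provider or training library you use; the company name, example replies, and helper names are all made up for illustration.

```python
import json

# Hypothetical brand-voice examples: (customer message, ideal reply).
EXAMPLES = [
    ("Where is my order?", "Thanks for reaching out! Let me check on that right away."),
    ("I want a refund.", "I'm sorry it didn't work out. I can start your refund now."),
]

SYSTEM_PROMPT = "You are a friendly support agent for Acme Co."  # assumed brand voice


def to_training_records(examples, system_prompt):
    """Convert (user, assistant) pairs into chat-style training records."""
    records = []
    for user_text, assistant_text in examples:
        records.append({
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]
        })
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)


records = to_training_records(EXAMPLES, SYSTEM_PROMPT)
jsonl_text = to_jsonl(records)
print(jsonl_text)
```

In practice most of the effort in fine-tuning goes into collecting and reviewing examples like these, since the model will imitate whatever tone and habits the dataset contains.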

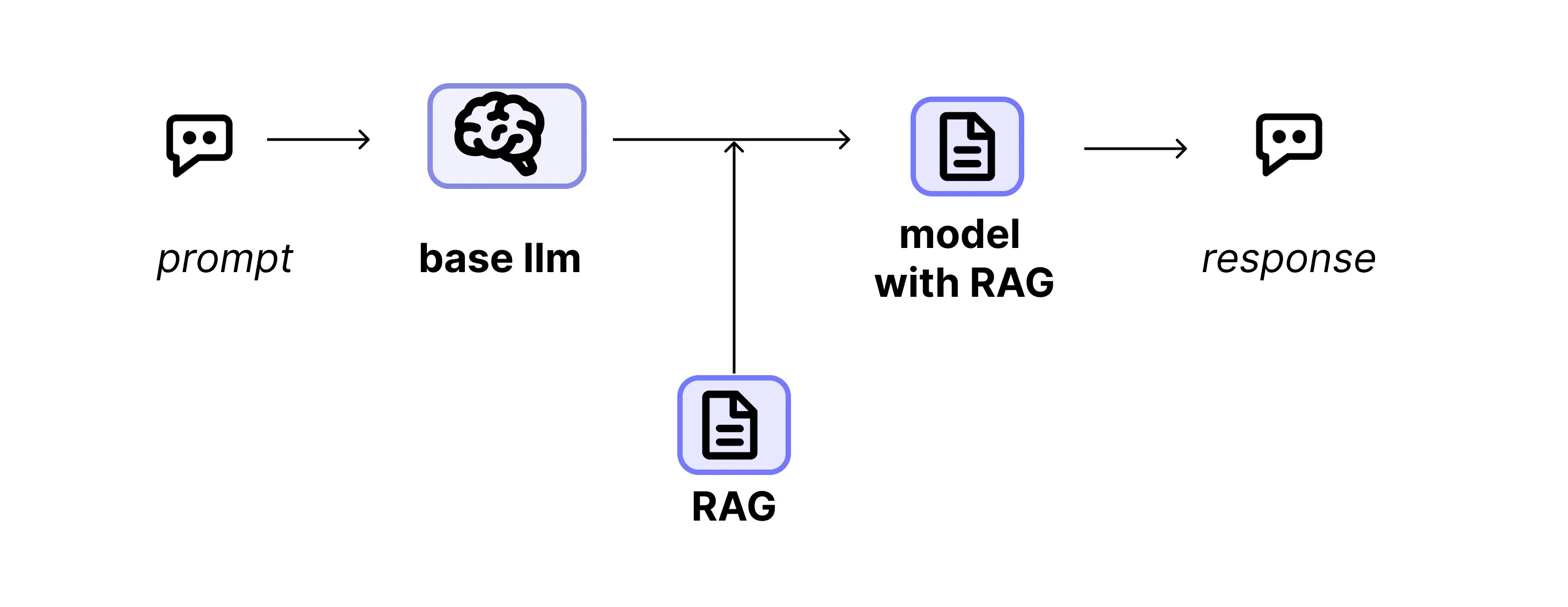

RAG.

Imagine you’re building an application that needs to answer specific questions using documents or data from an internal company database. You can use retrieval-augmented generation (RAG) to have the LLM answer questions based only on data retrieved from that database. The data source can be anything from the documents on your own computer to a large internal company database.

Example Applications:

- Internal Knowledge Base Assistant: Retrieve documents, chats, or emails from a company’s internal database to answer employee questions (for example, about company policies, how to resolve technical issues, design documents, etc.).

- Legal or Medical Advisor: Fetch specific legal statutes or medical guidelines to provide accurate advice.
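The retrieve-then-answer flow can be sketched in a few lines. This toy version ranks documents by simple word overlap with the question, which is an assumption made to keep the example self-contained; production systems typically use embeddings and a vector search instead. The documents, identifiers, and function names are hypothetical, and no model is called: the output is the prompt that would be sent to the LLM.

```python
import re

# Hypothetical internal documents; a real system would load these from a database.
DOCUMENTS = {
    "vacation-policy": "Employees receive 20 days of paid vacation per year.",
    "expense-policy": "Submit expense reports within 30 days of purchase.",
    "vpn-guide": "To reset your VPN password, open the IT portal and choose Reset.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by word overlap with the question (toy retriever)."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(text)), doc_id)
        for doc_id, text in documents.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the question.
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def build_rag_prompt(question, documents, top_k=1):
    """Put the retrieved text in the prompt so the model answers from it."""
    doc_ids = retrieve(question, documents, top_k)
    context = "\n".join(f"[{d}] {documents[d]}" for d in doc_ids)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(build_rag_prompt("How many days of paid vacation do employees receive?", DOCUMENTS))
```

Because the knowledge lives in the documents rather than in the model, updating an answer only requires updating the database, not retraining anything.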

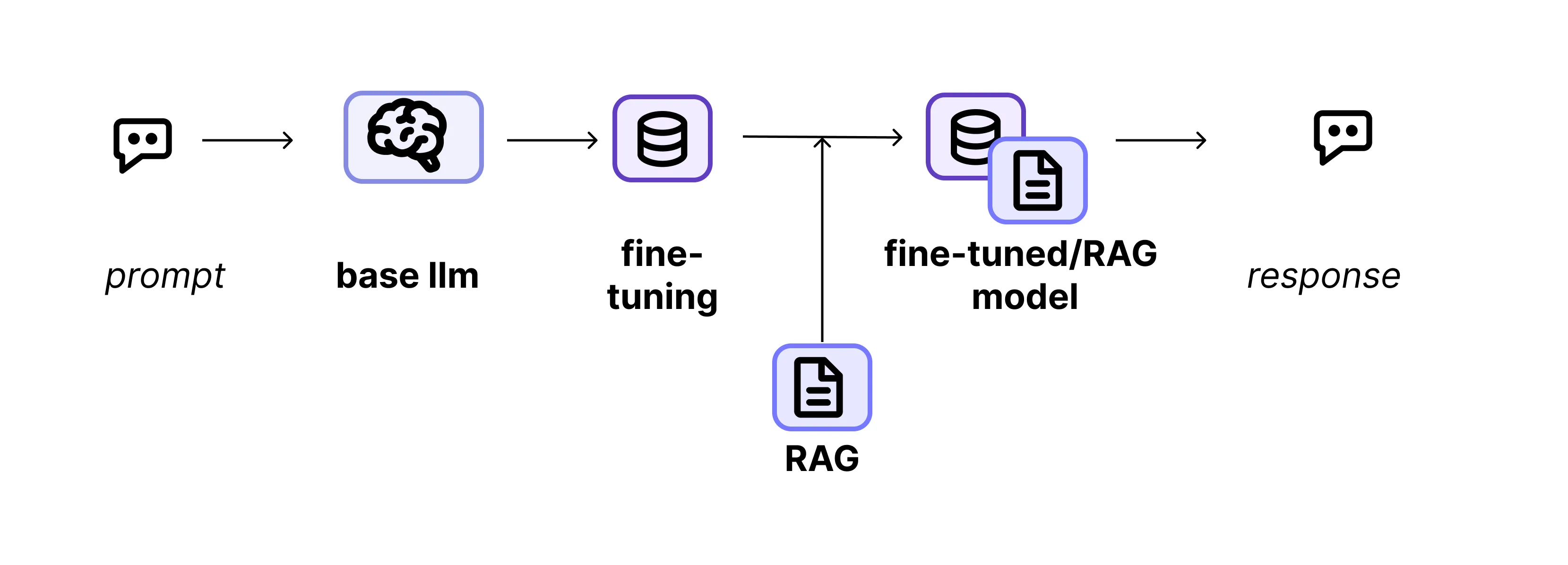

Fine-tuning + RAG.

Combining fine-tuning and RAG allows for even more powerful applications. In this approach, the model is first fine-tuned on domain-specific data to learn context and language style. Then, RAG is used to fetch the most relevant information from an external database, so the model can ground its answers in that data. This combines the best of both worlds: answers that are tailored to the user’s needs and that draw on specific data sources.

Example applications:

- Game Master: A game master fine-tuned to explain game rules, create a narrative that mirrors the game’s story (if relevant), and explain game strategies in a way that’s responsive to real-time game events, while using RAG to fetch the most relevant information from the game’s rules.

- Emotional Support Bot: An empathetic, compassionate bot that can give real-time support (from fine-tuning) to people in crisis, leveraging guided meditation scripts, self-help articles, and mental health toolkits (from RAG).

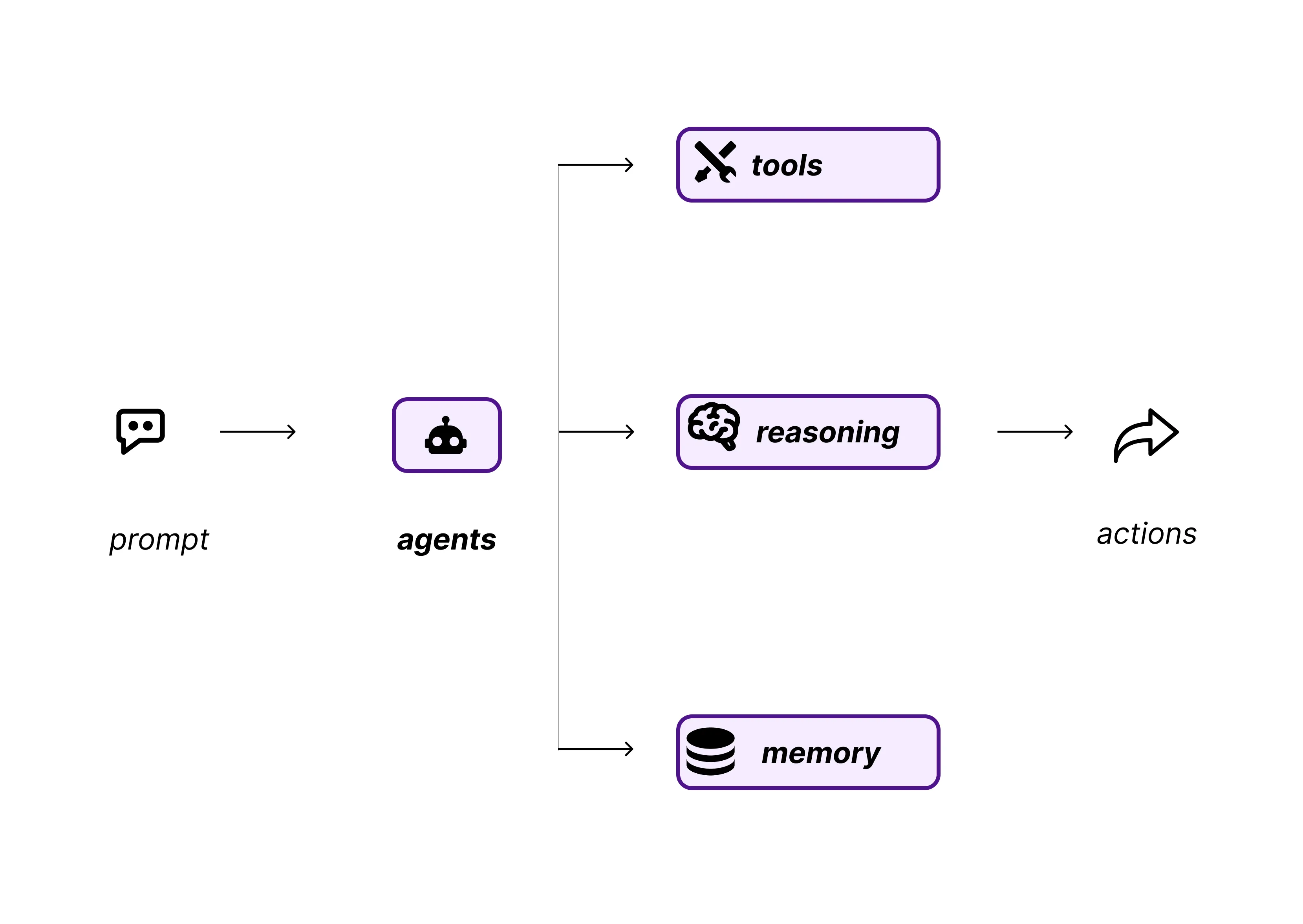

Agents.

Agents are a way to use LLMs to make decisions and take actions. They orchestrate multiple tasks or tools to complete a complex workflow. They can integrate with external APIs or databases, enabling dynamic and interactive applications.

Example applications:

- Restaurant Reservation System: Curate a list of restaurants based on user preferences and real-time availability, and then make a reservation for them.

- Task Automation Assistant: An agent that schedules meetings, sends emails, or performs administrative tasks.

- Financial Trader: Integrates with financial APIs to analyze stock data and make trading decisions.
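The core of an agent is a loop: the model chooses an action, a tool runs it, and the result is fed back so the model can choose the next step. The sketch below follows the restaurant reservation example. Everything in it is hypothetical: the two tools return canned data instead of calling real APIs, and `scripted_model` is a hard-coded stand-in for the LLM so the example runs on its own.

```python
# Hypothetical tools; a real agent would call external APIs here.
def find_restaurants(cuisine):
    listings = {"italian": ["Luigi's", "Trattoria Roma"], "thai": ["Bangkok Garden"]}
    return listings.get(cuisine, [])


def book_table(restaurant, time):
    return f"Booked {restaurant} at {time}"


TOOLS = {"find_restaurants": find_restaurants, "book_table": book_table}


def scripted_model(goal, history):
    """Stand-in for the LLM: picks the next action from what has happened so far.

    A real agent would send the goal, the history, and the tool descriptions
    to a model and parse its reply into this same (tool, arguments) shape.
    """
    if not history:
        return ("find_restaurants", {"cuisine": goal["cuisine"]})
    last_tool, last_result = history[-1]
    if last_tool == "find_restaurants" and last_result:
        return ("book_table", {"restaurant": last_result[0], "time": goal["time"]})
    return None  # nothing left to do


def run_agent(goal, model, tools, max_steps=5):
    """Loop: ask the model for an action, run the tool, record the result."""
    history = []
    for _ in range(max_steps):
        action = model(goal, history)
        if action is None:
            break
        tool_name, arguments = action
        result = tools[tool_name](**arguments)
        history.append((tool_name, result))
    return history


history = run_agent({"cuisine": "italian", "time": "19:00"}, scripted_model, TOOLS)
for tool_name, result in history:
    print(tool_name, "->", result)
```

The `max_steps` cap is a deliberate design choice: since the model decides what happens next, a step limit keeps a confused agent from looping forever or running up costs.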

Custom ML Models.

Custom ML models are trained from scratch on a dataset built for one specific task, rather than adapted from a pre-trained LLM. This is useful for specialized applications that general-purpose LLMs don’t cover, such as tasks built on images or sensor data.

Example Applications:

- Self-Driving Car ML Models: Self-driving cars process real-time information from cameras and sensors to make decisions. A self-driving car model would be trained on a dataset of images and sensor data to make driving decisions.

- AlphaFold: A model that predicts the structure of a protein from its sequence, with applications in drug discovery.

- Medical Diagnosis Based on Imaging: A model that can diagnose a disease from a patient’s medical images, such as X-rays or retinal scans (tuberculosis, retinal disease, cancer, fracture, etc.).
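To show what "training a model on a specific dataset" means at the smallest possible scale, here is a toy nearest-centroid classifier written from scratch. It is only a sketch: the sensor readings and labels are made up, and real systems like the ones above use deep neural networks trained on very large datasets. The shape of the workflow is the same, though: collect labeled data, fit a model to it, then predict on new inputs.

```python
def train_centroids(samples, labels):
    """Learn one centroid (mean feature vector) per class from labeled data."""
    sums, counts = {}, {}
    for features, label in zip(samples, labels):
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in total] for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: distance(centroids[label]))


# Made-up sensor readings: (speed in m/s, distance to obstacle in m).
samples = [(5.0, 40.0), (6.0, 35.0), (20.0, 8.0), (22.0, 5.0)]
labels = ["keep_going", "keep_going", "brake", "brake"]

model = train_centroids(samples, labels)
print(predict(model, (21.0, 6.0)))
```

Unlike the earlier approaches, nothing here comes pre-trained: the model knows only what the dataset teaches it, which is why custom models demand the most data and effort.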

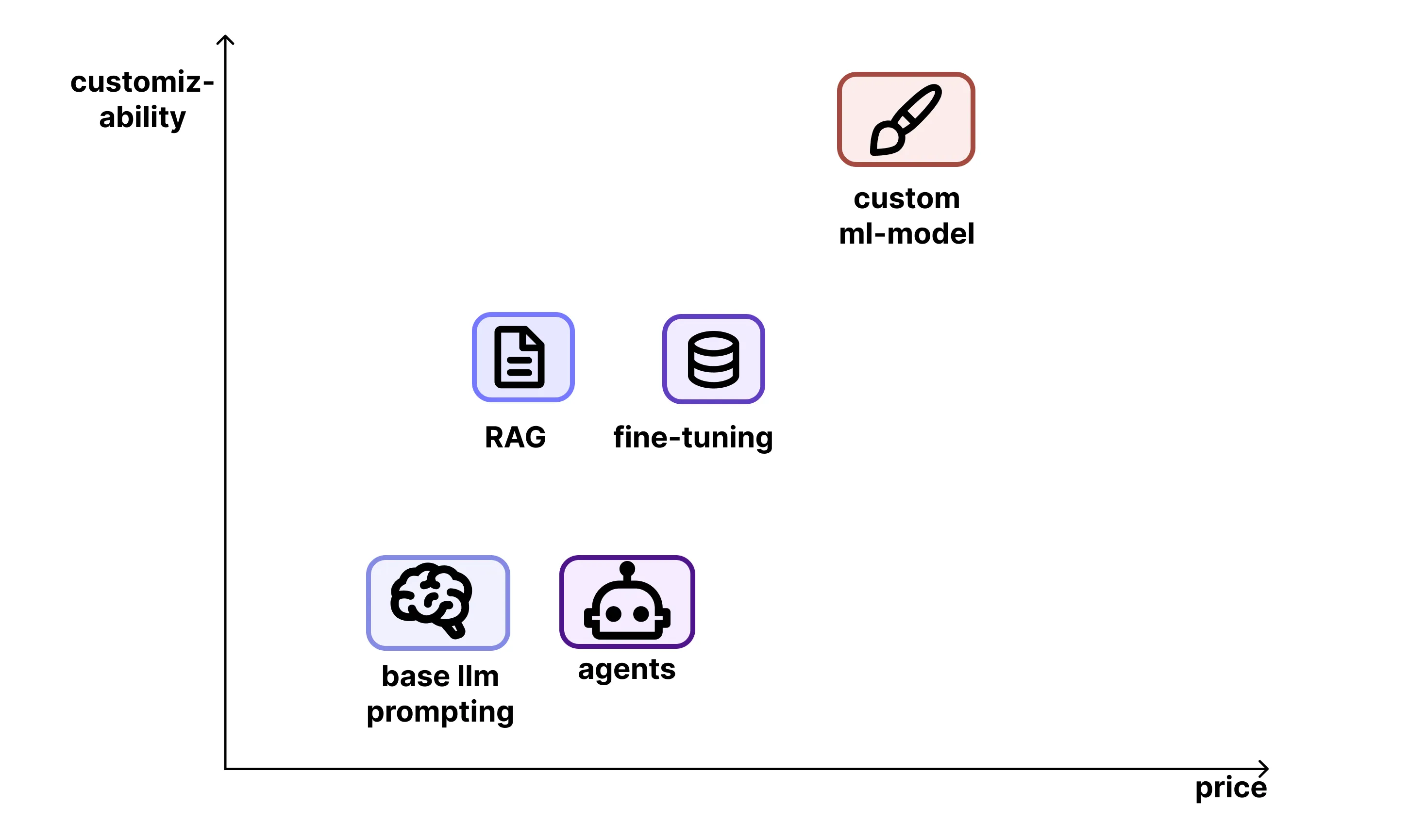

Comparisons

Below is a table that summarizes the different ways you can customize LLMs, and some different use cases for each.

| Concept | Definition | Use Cases |
|---|---|---|
| Prompting | Crafting and optimizing input text to guide an AI model’s response. | Chatbots, content creation, code generation, data extraction. |
| Fine-Tuning | Training a pre-existing AI model on a smaller, domain-specific dataset to improve its performance. | Customer support bots, legal/medical AI assistants, AI-powered tutoring. |
| Retrieval-Augmented Generation (RAG) | Combining information retrieval with text generation to enhance accuracy and reduce hallucinations. | AI assistants with real-time data, research summarization, enterprise AI applications. |
| Agents | Autonomous AI systems that make decisions, take actions, and interact dynamically. | AI-powered assistants, workflow automation, code generation, market research bots. |
| Custom Machine Learning Models | Designing, training, and deploying models tailored to specific use cases instead of using pre-built solutions. | Fraud detection, recommendation systems, medical diagnostics, financial analysis. |

Now that you know the different functionalities, it is helpful to understand the tradeoffs in using each. The following table summarizes those tradeoffs (i.e., how complex each is to implement, how much data and compute it requires, what it costs, and how customizable it is).

Comparing LLM Functionalities

| Method | Basic Functionality | Complexity | Data/Compute Requirements | Cost | Customizability | Business Use Cases | Implementation Effort |
|---|---|---|---|---|---|---|---|
| Prompting LLM | Generate responses with carefully crafted prompts; fast, low effort. | ★☆☆☆☆ | ★☆☆☆☆ | $ | ★☆☆☆☆ | General-purpose use: quick tasks, chatbots, content generation, brainstorming ideas. | Low |
| Fine-Tuned LLMs | LLM is tailored to specific tasks or domains by training on additional data. | ★★☆☆☆ | ★★★★☆ | $$ | ★★★★☆ | Domain-specific tasks: customer support, technical writing, product recommendations, research assistance. | Medium |
| LLM with RAG | Combines LLM outputs with retrieval from external data sources for real-time knowledge. | ★★★☆☆ | ★★★☆☆ | $$ | ★★★★☆ | Knowledge-intensive tasks: legal queries, medical diagnostics, enterprise data retrieval, personalized recommendations. | Medium |
| LLM Agents | Use LLMs for multi-step reasoning and orchestration across tools/APIs. | ★★★★☆ | ★★★★☆ | $$$ | ★★★★☆ | Complex automation: customer workflows, advanced research, multi-step decision-making, API orchestration. | Medium |
| Custom ML Models | Fully tailored model built from scratch for a specific task or domain. | ★★★★★ | ★★★★★ | $$$$$ | ★★★★★ | Highly specialized tasks: fraud detection, unique domain applications, proprietary systems, custom business logic. | High |

Practice Problems:

- Let’s consider an AI sports performance analysis app that uses pose estimation and machine vision to help athletes correct their posture and movements in order to improve performance and reduce the risk of injury. Which kind of LLM functionality should we use, and why?


✅ Best Answer: Custom ML Model

💡 Why?

- LLMs are text-based, whereas pose estimation and movement analysis require computer vision models (e.g., CNNs, PoseNet, OpenPose).

- This app needs real-time video analysis, which LLMs cannot perform effectively.

- Custom ML models trained on athlete biomechanics are better suited for detecting posture errors and providing corrective feedback.

- However, an LLM could assist by explaining corrections in natural language based on the vision model’s results.

✅ Fine-Tuning + RAG (For Personalized Coaching):

**RAG**:

- The AI needs to retrieve expert sleep guidelines and CBT-I techniques from a trusted knowledge base.

- Sleep science updates frequently, so fine-tuning alone would become outdated.

- If users ask medical questions, the AI can pull trusted sources instead of relying on general LLM knowledge.

**Fine-tuning**:

- If the app wants a consistent coaching tone while still retrieving medical insights, fine-tuning an LLM on sleep coaching dialogues + RAG for real-time sleep science would be ideal.

⚠️ Why Not Just Prompting?

- General LLM prompting might provide generic sleep advice, but it won’t personalize insights to the user’s specific sleep patterns or pull in the latest research.

✅ LLM Agents

This app needs to take actions (booking flights, making reservations, checking user schedules). Agents allow the AI to orchestrate multiple APIs:

- Access calendar data 📅

- Query flight booking systems ✈️

- Reserve restaurants 🍽

✅ Agents (For Interactive Tasks):

If the app provides real-time, hands-on feedback (e.g., guiding a user through a cooking or car repair process), it may need an agent to:

- Interpret user inputs (e.g., “What step are you on?”)

- Adjust recommendations dynamically

- Access external APIs (e.g., fetching job application deadlines, car repair tutorials)

✅ Fine-Tuning + RAG (For Personalized Coaching):

- If the app wants a consistent coaching style, fine-tuning an LLM on high-quality step-by-step instructional content could be useful. RAG would then retrieve the latest information, prices, legal regulations, or new techniques.

- Fine-tuning would allow the app to customize the coaching style to the user’s preferences and needs.

⚠️ Why Not Just Prompting?

- Prompting alone could generate general how-to guides, but it wouldn’t adapt to the latest trends, user inputs, or real-time market data.

- For example, mortgage advice from a static prompt could be outdated, but RAG could fetch today’s rates.